What did students learn during a science communication course? Not much.

By Teodora Stoica

Author(s) and Year: Robert S. Capers, Anne Oeldorf-Hirsch, Robert Wyss, Kevin R. Burgio and Margaret A. Rubega; 2022

Journal: Frontiers in Communication

TL;DR: Trainee communication behavior was evaluated during an intensive science communication course. Use of jargon remained the same, and use of invaluable narrative techniques did not increase. The authors concluded that objective tools can be used to measure the success of communication training programs, although this one did not reveal significant change in communication techniques.

Why I chose this paper: In 2020, the results of an experiment testing whether science communication (scicomm) training works caused quite a kerfuffle in the scicomm world. Understandably so, since the authors claimed that a 15-week intensive course had no impact on scicomm skills. Besides being discouraging, these results could contribute to the dismantling and defunding of future scicomm training programs. Why did the authors make this claim? I chose the current paper because it delves deeper into the original data in an effort to discover why the training was unsuccessful.

Current science communication training is not very effective

“Effective science communication” is a vague term. What are we measuring, and how? Current training programs range from a few hours to full-fledged degrees, each with different motivations, from de-jargonizing tactics to understanding audiences. But do they work? In 2020, Rubega et al. reported a 3-year experiment testing whether training graduate students in the art of scicomm would change how they presented their data to an audience of undergraduate students. The surprising and disappointing answer? It didn’t. Frustrated, the authors scratched their heads and asked: why not?!

The Details

The current Capers et al. paper is a deep dive into the data from the original 3-year experiment. To evaluate whether graduate student performance changed in particular areas in response to the training, the authors used a word-counting tool to tally the jargon words each trainee used and counted the speech behaviors (e.g., pausing, stuttering) each trainee displayed while explaining a science concept. The graduate-level scicomm course was taught once a year for 3 years, and 29 trainees were matched to controls who did not take the course and were evaluated at the same time. The training had three simple goals:

1. Clarity: Provide information that is clear and easy to understand

2. Credibility: Appear knowledgeable and trustworthy

3. Engagement: Make the audience interested in the subject

The course ran for 15 weeks: 4 weeks highlighting the role of scientists and journalists in public communication of science, followed by 11 weeks of active practice and post-practice reflection on science communication performance. Each student was then interviewed and recorded twice by journalism students (once at the beginning of training, and once after), and each journalism student produced a short news story based on the interview. The trainees then watched the resulting videos as a group and asked questions about their peers’ videos, such as “Is the story clear?” and “Were the analogies used by students useful?” Graduate students recruited as controls were matched closely to experimental subjects on age, gender and ethnicity.

At the beginning and end of the semester (before and after training), both experimental subjects and controls were asked “How does the scientific process work?” These before and after videos were shown to a large class of undergraduates in a Communications class. The student evaluators assessed trainees’ communication success in the three areas of Clarity, Credibility and Engagement. The videos were also analyzed for body language, and the transcripts were coded for:

1. Overall language use

2. Jargon used

3. Use of metaphors, analogies, and stories

The Results

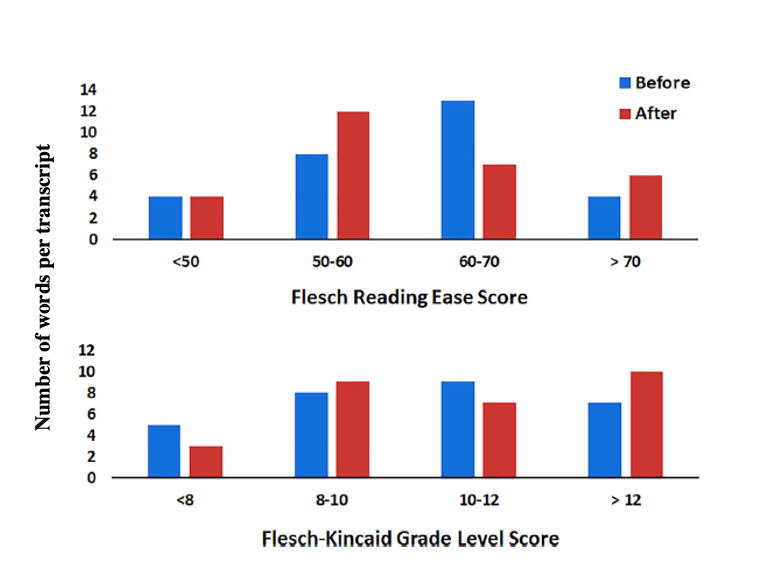

Word analysis showed that the use of analytical words increased significantly, indicating that trainees used more complex, rather than simpler, speech after training. Nor did trainees simplify the way they spoke to an audience: transcripts from before (blue) and after (red) science communication training had similar ease-of-reading scores (Flesch Reading Ease score) and grade-level assignments (Flesch-Kincaid Grade Level score) (Figure 1).
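For readers who have not met the two readability scores, here is a minimal Python sketch of the standard published Flesch formulas. It is not the authors' tool: the syllable counter is a crude vowel-group heuristic I am assuming for illustration, and the function names are made up, so scores will differ somewhat from those of dedicated readability software.

```python
import re


def count_syllables(word: str) -> int:
    """Approximate syllables as runs of vowels (a rough heuristic, an assumption)."""
    groups = re.findall(r"[aeiouy]+", word.lower())
    return max(1, len(groups))


def readability(text: str) -> tuple[float, float]:
    """Return (Flesch Reading Ease, Flesch-Kincaid Grade Level) for a transcript."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")

    syllables = sum(count_syllables(w) for w in words)
    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = syllables / len(words)

    # Standard Flesch Reading Ease: higher means easier to read.
    ease = 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word
    # Standard Flesch-Kincaid Grade Level: higher means a later school grade.
    grade = 0.39 * words_per_sentence + 11.8 * syllables_per_word - 15.59
    return ease, grade


# Made-up example sentences, not taken from the study's transcripts.
plain = "The cat sat. The dog ran."
dense = "Photosynthetic organisms metabolize atmospheric carbon dioxide."
plain_ease, plain_grade = readability(plain)
dense_ease, dense_grade = readability(dense)
```

Both scores depend only on sentence length and word length, so short sentences of short words score as easier. Neither formula knows whether a short word is jargon, which is one reason the authors counted jargon separately.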

Credibility

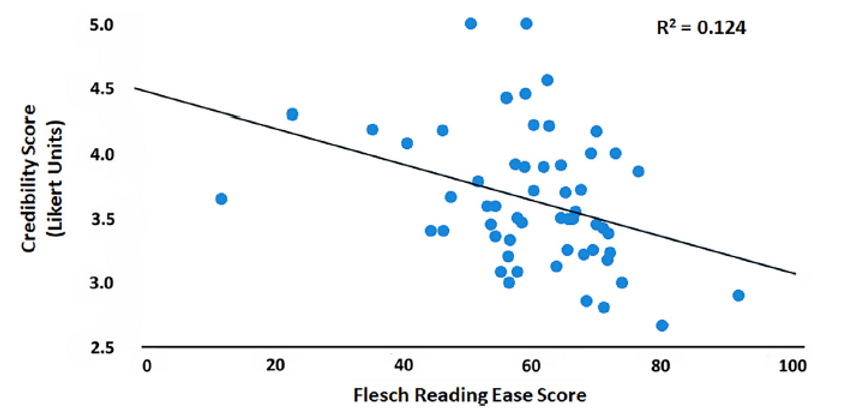

Transcript data also indicated that the easier a transcript was to read, the less the audience felt the subject being spoken about was credible, or relevant to them (Figure 2).

Jargon Word Use

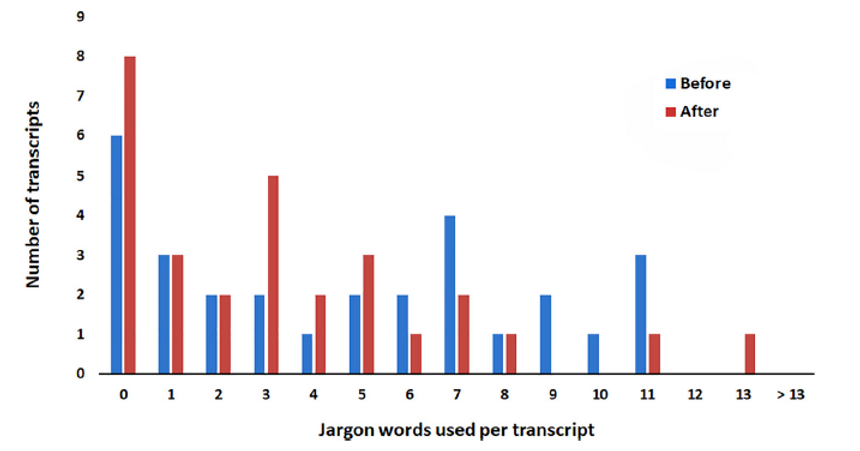

Although trainees used a similar number of jargon words before and after the training (Figure 3), they increased their use of unique jargon words per transcript. In other words, trainees replaced their original jargon words with different jargon words after the training. Oy vey.
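The difference between total and unique jargon counts is easy to miss, so here is a small Python sketch of the distinction. The jargon list, the transcripts, and the `jargon_counts` function are all hypothetical and invented for illustration; the authors' actual word-counting tool and word lists are not reproduced here.

```python
import re


def jargon_counts(transcript: str, jargon_terms: set[str]) -> tuple[int, int]:
    """Return (total jargon words, unique jargon words) found in a transcript."""
    words = re.findall(r"[a-z']+", transcript.lower())
    hits = [w for w in words if w in jargon_terms]
    return len(hits), len(set(hits))


# Hypothetical jargon list and made-up transcripts (assumptions, not study data).
JARGON = {"hypothesis", "phenotype", "allele", "taxa"}
before = "We test a hypothesis, refine the hypothesis, then test the hypothesis again."
after = "We test a hypothesis about how each allele shapes the phenotype."

before_total, before_unique = jargon_counts(before, JARGON)
after_total, after_unique = jargon_counts(after, JARGON)
```

In this toy case both transcripts contain the same total number of jargon words, but the second spreads them over more distinct terms, which mirrors the pattern described above.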

Story-telling Ability

Trainees used a similar amount of storytelling before and after the training, and storytelling correlated positively with audience understanding. There was also no before/after training difference in Clarity, Credibility and Engagement as assessed by behaviors used while speaking (such as pausing, stuttering, smiling and dynamic speech tone), yet some of these behaviors did correlate with audience understanding. For example, pausing and stop-and-start speech related negatively to Clarity and Credibility, while varying a speaker’s tone was positively related to Clarity, Credibility and Engagement.

The Impact

The results of this paper help explain why the audience did not find trainees from the earlier 2020 Rubega et al. study easier to understand. After a semester-long science communication course, trainees peppered their speech with a similar number of jargon words, used more, rather than less, complex language to explain their concept, and did not weave their scientific ideas into a story effectively.

Put simply, the trainees did not enact the techniques and behaviors that the training was trying to instill. The authors disheartenedly write, “It is not too much to say that we were astonished at the lack of strategic preparation,” and conclude that the students exhibited “an inflated sense of self-efficacy.”

Several lingering questions remain. How much scicomm practice, and what kind, is necessary before changes in communication behavior begin to take hold for trainees? How do we overcome the barrier that prevents science communication trainees from learning and applying useful scicomm techniques? And finally, how do we refresh the notion that knowing how to communicate isn’t the same as actually doing so successfully? Only rigorous future studies can answer these pressing questions.

{Revised on August 29th}

Edited by Jacqueline Goldstein & Michael Golden
